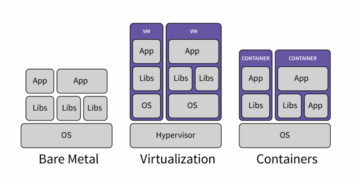

The evolution of cloud computing has been a continuous journey toward abstraction. It began with managing physical hardware (on-premises), moved to managing virtual machines (Infrastructure as a Service, or IaaS), and then to deploying applications onto managed platforms and container services (Platform as a Service, or PaaS). The Serverless paradigm is the latest and most far-reaching step in this evolution, and it fundamentally alters how applications are built, deployed, and scaled. In the Serverless model, the developer's focus shifts almost entirely to business logic. The cloud provider takes responsibility for provisioning, scaling, patching, and managing the underlying infrastructure. The term “Serverless” is deliberately provocative: servers still exist, but the developer no longer provisions or maintains them.

This comprehensive deep dive will explore the transformative impact of Serverless computing, dissecting its core technical mechanics, its profound economic advantages, and the architectural shifts it mandates. We will analyze the core services, primarily Functions as a Service (FaaS), and detail how Serverless enables unprecedented agility, eliminates idle cost, and fosters a culture of event-driven architecture. Understanding the rise of Serverless is crucial for technologists and business leaders who seek to optimize operational efficiency and maximize the pace of digital innovation.

1. Defining the Serverless Core: FaaS and BaaS

The Serverless movement is defined by two primary service models, both designed to remove infrastructure management from the user’s responsibility.

A. Functions as a Service (FaaS)

FaaS is the most common interpretation of Serverless, where developers upload small, discrete units of code—functions—that execute only in response to specific triggers.

- Execution Model: Code execution is event-driven. A function runs when an event triggers it (e.g., a file upload to storage, an HTTP request, a new database entry, or a timer).

- Managed Execution Environment: The provider automatically provisions the compute resources needed to run the code. It also manages the operating system, runtime environment, and scaling logic.

- Billing Granularity: The user is billed per request and for the time the function is actively executing, usually weighted by the amount of memory allocated to it. Metering is fine-grained; AWS Lambda, for example, bills duration in 1 ms increments. This eliminates the cost of idle compute time, which is a major expense in VM and container models.

- Ephemeral Nature: The execution environment is temporary. The provider may reuse a “warm” environment for later invocations, but it can reclaim that environment at any time. Functions therefore must not rely on local state surviving between calls. This short lifespan supports both security and cost efficiency.
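To make the FaaS contract concrete, here is a minimal sketch of a function in AWS Lambda's Python style. The platform calls `handler(event, context)` once per event. Your code never opens a socket or runs a server loop. The event shape assumes an HTTP request delivered through an API Gateway proxy integration; the greeting logic is purely illustrative.

```python
import json


def handler(event, context):
    """Entry point the platform invokes once per event; it returns and keeps no server running.

    Assumes an API Gateway proxy-style event, where query parameters arrive under
    "queryStringParameters" (None when the request has none).
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Locally, we play the platform's role: build an event and invoke the handler directly.
    print(handler({"queryStringParameters": {"name": "Ada"}}, None))
```

Everything outside the function body is the provider's job: receiving the request, provisioning an environment, scaling out, and billing only for the time `handler` runs.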

B. Backend as a Service (BaaS)

BaaS encompasses fully managed cloud services that handle common backend application functions without requiring the user to provision or manage any servers for them.

- Core Function: Provides ready-to-use application services. Examples include fully managed databases (such as Amazon DynamoDB or Azure Cosmos DB), authentication services, and managed storage (such as Amazon S3).

- Operational Principle: BaaS services abstract away server maintenance, scaling, and operational management. The user interacts with the service through its APIs.

- Strategic Importance: BaaS lets developers build feature-rich applications largely out of managed services. Scalability and reliability are then inherited from the cloud provider rather than custom-built.

2. The Economic Transformation: Eliminating Idle Cost

The most immediate and compelling argument for Serverless adoption is a fundamental shift in cloud economics, toward paying only for the work actually performed.

A. Pay-Per-Execution Model

Traditional compute models (VMs or containers) operate under a fixed capacity model, where a server is always running and always costing money, even if it is idle. Serverless completely breaks this paradigm.

- Zero Compute Cost When Idle: If a function receives no traffic, its compute cost is zero. Attached services such as storage and logging are still billed. This is a major financial advantage for applications with highly variable or infrequent usage, such as internal tools, batch jobs, or applications with long off-peak periods.

- Granular Billing: FaaS meters execution in small increments, so cost tracks the work actually performed far more closely than paying for provisioned capacity by the hour.

- Savings for Variability: For systems whose traffic fluctuates dramatically, the savings come from automatically scaling down to zero capacity when not in use, rather than maintaining expensive minimum capacity.
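The pay-per-execution model can be written as a small formula: a per-request fee plus execution time weighted by allocated memory (GB-seconds). The sketch below follows that structure, which matches how AWS Lambda prices compute. The two rates are hypothetical placeholders, not any provider's current prices, and free tiers are ignored.

```python
# Illustrative FaaS cost model. The default rates are HYPOTHETICAL placeholders,
# not published prices; substitute your provider's current list prices.
PRICE_PER_GB_SECOND = 0.00001       # hypothetical $ per GB-second of execution
PRICE_PER_MILLION_REQUESTS = 0.20   # hypothetical $ per 1M invocations


def faas_monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                      price_per_gb_s: float = PRICE_PER_GB_SECOND,
                      price_per_million: float = PRICE_PER_MILLION_REQUESTS) -> float:
    """Monthly compute cost = memory-weighted duration charge + per-request charge."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million
    return gb_seconds * price_per_gb_s + request_cost


if __name__ == "__main__":
    print(faas_monthly_cost(0, 120, 512))           # an idle month costs 0.0 in compute
    print(faas_monthly_cost(1_000_000, 100, 1024))  # roughly 1.2 with the placeholder rates
```

The key property is visible in the first call: zero invocations produce zero compute cost, whereas an always-on VM is billed whether or not it serves traffic.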

B. Shift in Operational Expenditure (OpEx)

Serverless alters the nature of the internal IT OpEx by redirecting engineering effort away from maintenance.

- Reduced Labor Costs: Eliminating the need to patch, update, secure, monitor, and right-size the underlying operating system and runtime environment frees up expensive DevOps and infrastructure engineers to focus on higher-value tasks: improving application logic, designing better features, and ensuring overall system resilience.

- Total Cost of Ownership (TCO): Once reduced labor, the elimination of idle resources, and automatic scaling are factored in, the TCO for Serverless applications is often significantly lower than for manually managed IaaS or PaaS environments. This can hold even though per-unit compute rates may be higher. For steady, high-utilization workloads, however, always-on capacity can be cheaper, so the comparison is best made workload by workload.
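One way to frame the TCO comparison is a break-even volume. This is the number of monthly invocations at which FaaS compute cost equals a fixed always-on option, with that option's operational labor included. The sketch below is a simplified model under stated assumptions. All prices and labor figures are hypothetical. It ignores free tiers and any operational effort on the FaaS side.

```python
# Break-even sketch: FaaS compute cost vs. a fixed always-on alternative.
# Every price here is a HYPOTHETICAL placeholder, not a published rate.


def faas_cost_per_invocation(avg_duration_ms: float, memory_mb: int,
                             price_per_gb_s: float, price_per_million: float) -> float:
    """Memory-weighted duration charge plus the per-request fee, for one invocation."""
    gb_seconds = (avg_duration_ms / 1000) * (memory_mb / 1024)
    return gb_seconds * price_per_gb_s + price_per_million / 1_000_000


def break_even_invocations(vm_monthly_cost: float, ops_labor_monthly_cost: float,
                           avg_duration_ms: float, memory_mb: int,
                           price_per_gb_s: float, price_per_million: float) -> float:
    """Monthly invocations at which FaaS costs the same as the always-on option.

    Below this volume FaaS is cheaper; above it the fixed-capacity option wins
    (under this simplified model).
    """
    fixed = vm_monthly_cost + ops_labor_monthly_cost
    per_call = faas_cost_per_invocation(avg_duration_ms, memory_mb,
                                        price_per_gb_s, price_per_million)
    return fixed / per_call


if __name__ == "__main__":
    rates = dict(avg_duration_ms=100, memory_mb=1024,
                 price_per_gb_s=0.00001, price_per_million=0.20)
    print(break_even_invocations(60, 0, **rates))    # compute cost only
    print(break_even_invocations(60, 540, **rates))  # including a share of ops labor
```

With these placeholder numbers, the break-even moves from roughly 50 million to roughly 500 million invocations a month once labor is counted. This illustrates why the labor term often dominates the TCO argument.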

3. The Architectural Paradigm Shift: Event-Driven Design

Serverless strongly encourages an event-driven architecture, which is well suited to building highly decoupled and scalable applications.

A. Decoupling Through Events

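In a well-designed Serverless system, services typically interact through events rather than direct, synchronous API calls. Synchronous calls are reserved for cases where a caller genuinely needs an immediate response, such as an HTTP API.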

- Asynchronous Communication: A function completes its task and publishes an event (e.g., “UserRegistered,” “FileProcessed”) to a managed message queue, event bus, or stream. Examples include Amazon SQS, Amazon EventBridge, Amazon Kinesis, or a managed Apache Kafka service. Other services interested in that event subscribe to it and trigger their own functions.

- Isolation: This decoupling isolates the services from one another. The ‘Order Placement’ service does not need to know where the ‘Inventory Update’ service is, or whether it is currently functioning. It simply publishes an event, which improves system resilience and reduces cascading failures.

- Increased Resilience: If a subscribing function fails, the queue or stream retains the event. The function can retry processing later without affecting the service that originally published it. Events that keep failing are typically moved to a dead-letter queue for inspection instead of being retried forever.
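The decoupling and retry behavior described above can be modeled in a few lines. The following is a toy, in-process sketch, not a cloud service API. The class name, the per-subscriber queues, and the `max_attempts` dead-letter rule are all illustrative assumptions. It shows that the publisher only enqueues, and that a failing subscriber keeps its event for a later retry without affecting anyone else.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class InMemoryEventBus:
    """Toy, in-process stand-in for a managed queue or topic (hypothetical; not a cloud API).

    Each subscriber gets its own queue, so a failing consumer never blocks the
    publisher or the other consumers.
    """

    def __init__(self, max_attempts: int = 3) -> None:
        self.max_attempts = max_attempts
        self.handlers: Dict[str, Callable[[dict], None]] = {}
        self.queues: Dict[str, Deque[dict]] = {}
        self.dead_letters: List[dict] = []

    def subscribe(self, name: str, handler: Callable[[dict], None]) -> None:
        self.handlers[name] = handler
        self.queues[name] = deque()

    def publish(self, event: dict) -> None:
        # The publisher only enqueues; it does not know who consumes the event
        # or whether those consumers are currently healthy.
        for queue in self.queues.values():
            queue.append({"event": event, "attempts": 0})

    def deliver(self) -> None:
        """One delivery pass: try each queued message once, keeping failures for retry."""
        for name, queue in self.queues.items():
            for _ in range(len(queue)):
                message = queue.popleft()
                try:
                    self.handlers[name](message["event"])
                except Exception:
                    message["attempts"] += 1
                    if message["attempts"] >= self.max_attempts:
                        # Give up: park the event for inspection instead of retrying forever.
                        self.dead_letters.append({"subscriber": name, **message})
                    else:
                        queue.append(message)  # retained; retried on the next pass
```

For example, if an inventory subscriber is briefly down when an “OrderPlaced” event arrives, an email subscriber still processes the event on the first pass. The inventory subscriber then picks it up on a later pass.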

B. Microservices Enforcement

Serverless FaaS naturally encourages a microservices architecture.

- Granular Focus: A FaaS function is a small, single-purpose unit of code. This pushes developers to think in terms of small, isolated business capabilities, which aligns closely with the microservices philosophy.

- Independent Development: Teams can develop, deploy, and scale their functions independently of other teams’ functions, accelerating the overall release cycle.

4. Operational Benefits: Agility and Reliability

The Serverless model translates into decisive advantages in both the speed of development and the reliability of the production environment.

A. Unprecedented Agility and Time-to-Market

By abstracting away infrastructure concerns, developers can focus almost exclusively on writing business logic.

- Faster Deployment: Deploying a new function is often a matter of uploading a few files, rather than configuring VMs, building containers, or setting up networking. This dramatically accelerates the time-to-market for new features and bug fixes.

- Automatic Scaling: Scaling is built in and managed by the provider. A function can go from zero requests to thousands within a short time without manual intervention. This remains subject to the provider’s concurrency quotas and the cold-start latency discussed in Section 6. The result is a fast response to shifts in demand.
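Scaling is not unbounded in practice, because providers apply per-account concurrency quotas. A standard way to estimate the concurrency a workload needs is Little's law: concurrent executions equal the request rate multiplied by the average duration. In the sketch below, the quota of 1,000 is only an example value, not a guaranteed limit for any account.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Little's law: executions in flight = arrival rate x time each execution takes."""
    return math.ceil(requests_per_second * avg_duration_s)


def fits_quota(requests_per_second: float, avg_duration_s: float,
               concurrency_quota: int) -> bool:
    """True if the estimated concurrency stays within the given quota (an assumed value)."""
    return required_concurrency(requests_per_second, avg_duration_s) <= concurrency_quota


if __name__ == "__main__":
    print(required_concurrency(2000, 0.25))  # 500 executions in flight
    print(fits_quota(5000, 0.5, 1000))       # False: 2,500 needed against a 1,000 example quota
```

The second case shows why halving function duration can matter as much as raising a quota: required concurrency scales linearly with both rate and duration.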

B. High Reliability and Built-in High Availability (HA)

Serverless services inherently benefit from the cloud provider’s massive infrastructure investment.

- Automatic Failover: Providers run function instances across multiple Availability Zones (AZs) within a region. If one zone fails, new invocations are served from healthy zones without any action from the user. In-flight requests may still fail, however, and protection against a full regional outage requires a multi-region design.

- Managed Patches and Updates: The cloud provider handles all operating system and runtime environment patching and security updates seamlessly, without requiring application downtime, removing a huge source of operational risk for the user.

5. Security in the Serverless World

Serverless fundamentally changes the Shared Responsibility Model for security, shifting the heavy burden of infrastructure protection to the cloud provider.

A. Reduced Attack Surface

By eliminating control over the operating system, the user also eliminates many potential security vectors.

- No OS Access: Developers have no persistent shell access (such as SSH) to the runtime environment and cannot modify the provider-managed operating system. This removes common footholds for lateral movement and malware persistence. The entire operating system layer is managed and secured by the provider.

- Ephemeral Execution: The execution environment is temporary. Even if an attack succeeds, the compromised environment is short-lived and is eventually replaced, which limits how long a threat can persist.

B. Granular Access Control

Serverless encourages the Principle of Least Privilege and makes it easier to apply at a finer granularity than other models.

- Function-Specific Permissions: Each individual function can be granted a unique, granular Identity and Access Management (IAM) role. That role specifies only the exact resources the function is allowed to interact with (e.g., Function A can only write to DynamoDB Table X; it cannot touch S3 Bucket Y). This limits the blast radius of any security vulnerability.

- Integrated Security: Serverless platforms integrate with the provider’s logging and auditing services. For example, AWS Lambda sends function logs to Amazon CloudWatch Logs, and AWS CloudTrail records API activity. Per-execution logs and audit trails are therefore straightforward to enable. Application-level security, such as validating inputs and scanning dependencies, remains the user’s responsibility.
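The “Function A can only write to Table X” example corresponds to an IAM policy document like the one built below. The policy uses the standard IAM JSON structure (`Version`, `Statement`, `Effect`, `Action`, `Resource`). The account ID, region, and table name are hypothetical. The `is_allowed` helper is a deliberately simplified check, not IAM's real evaluation logic. It handles exact matches only, with no wildcards, conditions, or explicit Deny. It exists only to show that anything not explicitly allowed is denied.

```python
import json


def least_privilege_policy(table_arn: str) -> dict:
    """IAM policy document letting a function write items to ONE DynamoDB table and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:PutItem"],
                "Resource": [table_arn],
            }
        ],
    }


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Greatly simplified check (exact matches only; no wildcards, conditions, or Deny).

    Real IAM evaluation is far richer; this only illustrates the default-deny idea.
    """
    for stmt in policy["Statement"]:
        if stmt["Effect"] == "Allow" and action in stmt["Action"] and resource in stmt["Resource"]:
            return True
    return False  # anything not explicitly allowed is denied


if __name__ == "__main__":
    table_x = "arn:aws:dynamodb:us-east-1:123456789012:table/X"  # hypothetical account and table
    policy = least_privilege_policy(table_x)
    print(json.dumps(policy, indent=2))
    print(is_allowed(policy, "dynamodb:PutItem", table_x))            # True
    print(is_allowed(policy, "s3:GetObject", "arn:aws:s3:::Y/data"))  # False
```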

6. Challenges and Trade-offs of Serverless

While transformative, the Serverless model is not without its architectural and operational trade-offs, which require careful planning.

A. Vendor Lock-In

Serverless functions and BaaS services are tightly integrated with the specific cloud provider’s API and ecosystem.

- Portability Concerns: Moving a complex Serverless application between major cloud providers (e.g., from AWS Lambda to Azure Functions) can be challenging because the event triggers, security models, and managed service APIs are unique to each vendor.

- Mitigation: Teams can limit lock-in by keeping business logic in plain, provider-independent code. Provider-specific event handling and service calls are then confined to thin adapter layers. Beyond that, organizations must weigh the cost and operational benefits of deep integration against the long-term risk of vendor dependence.
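One common way to reduce lock-in is to keep business logic in plain functions that know nothing about the cloud provider. Provider-specific event parsing is confined to a thin adapter. This is offered as an illustration rather than a prescription. The shipping rule and field names below are hypothetical. The adapter assumes an AWS Lambda function behind an API Gateway proxy integration.

```python
import json


# Provider-independent business logic: no cloud SDK types or event shapes.
def quote_shipping(weight_kg: float, express: bool) -> float:
    base = 5.0 + 1.5 * weight_kg          # hypothetical pricing rule
    return round(base * (2 if express else 1), 2)


# Thin adapter for AWS Lambda behind API Gateway (proxy integration assumed).
def lambda_handler(event, context):
    body = json.loads(event.get("body") or "{}")
    price = quote_shipping(float(body["weight_kg"]), bool(body.get("express", False)))
    return {"statusCode": 200, "body": json.dumps({"price": price})}


# Porting to another provider means writing a new adapter that translates that
# platform's request format into the same quote_shipping() call; the core stays untouched.
```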

B. The Cold Start Problem

When a FaaS function has not been called for some time, its execution environment needs to be initialized, which introduces a delay known as a “cold start.”

- Performance Impact: For latency-sensitive applications (like fast-response APIs), this initial delay can negatively impact user experience.

- Mitigation: Providers offer features such as provisioned concurrency (AWS Lambda) or pre-warmed instances (Azure Functions Premium plan) to keep execution environments initialized. Some teams also “warm” functions by invoking them on a schedule. These approaches add back a fixed cost, reducing the “zero-cost-when-idle” benefit.

C. Resource Constraints and Complexity

Serverless functions have technical limits on execution time, memory, and local disk space.

- Long-Running Processes: Workloads that require sustained processing over a long period (e.g., complex video encoding, large-scale scientific simulations) are often better suited to traditional VMs or containers. FaaS platforms cap execution time; AWS Lambda, for example, limits a single invocation to 15 minutes.

- Debugging: Debugging distributed, asynchronous, and ephemeral functions across various managed services can be more complex than debugging a monolithic application on a single, persistent server. It relies heavily on centralized logging and tracing tools.
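When a job might outrun the execution limit, a common pattern is to watch the remaining time and hand back a checkpoint before the timeout. A follow-up invocation, or a workflow service such as AWS Step Functions, can then resume from there. The sketch uses `context.get_remaining_time_in_millis()`, which the AWS Lambda Python context object provides. The `items`/`cursor` event fields, the safety margin, and `process_item` are hypothetical.

```python
SAFETY_MARGIN_MS = 10_000  # assumed margin: stop well before the hard timeout


def process_item(item):
    return item * 2  # placeholder for the real unit of work (hypothetical)


def handler(event, context):
    items = event["items"]
    start = event.get("cursor", 0)
    results = []
    for i in range(start, len(items)):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Not enough time left: return a cursor so the next invocation resumes at item i.
            return {"done": False, "cursor": i, "results": results}
        results.append(process_item(items[i]))
    return {"done": True, "cursor": len(items), "results": results}
```

Locally, a stub object with a `get_remaining_time_in_millis` method is enough to exercise the checkpoint path.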

7. Serverless Architecture Patterns and Use Cases

Serverless is not just for web backends; it enables a wide array of powerful architectural patterns across the enterprise.

A. Web and Mobile Backends

Serverless FaaS and BaaS are ideal for powering scalable web and mobile applications.

- API Endpoints: An API gateway (such as Amazon API Gateway) routes HTTP requests directly to FaaS functions, creating highly scalable and cost-efficient RESTful APIs.

- User Authentication: BaaS services handle user identity and authentication, removing the need to manage complex login infrastructure.
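A small REST backend often maps HTTP method and path pairs to handlers inside one function. Alternatively, the API gateway can route each path to its own function. The sketch below shows the in-function routing variant. It assumes the API Gateway REST API proxy event format (version 1.0), which carries `httpMethod`, `path`, and `body`. The `/orders` routes are hypothetical.

```python
import json


def list_orders(event):
    return 200, {"orders": []}                 # hypothetical handler


def create_order(event):
    payload = json.loads(event.get("body") or "{}")
    return 201, {"created": payload}           # hypothetical handler


ROUTES = {
    ("GET", "/orders"): list_orders,
    ("POST", "/orders"): create_order,
}


def handler(event, context):
    route = ROUTES.get((event.get("httpMethod"), event.get("path")))
    if route is None:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    status, body = route(event)
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }
```

Routing in the gateway instead gives each endpoint its own permissions and scaling, at the cost of more deployment units. Either choice fits the pattern described above.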

B. Data Processing Pipelines

Serverless is transformative for asynchronous data processing.

- Image/Video Processing: A file uploaded to S3 triggers a FaaS function, which resizes the image or processes the video and then writes the result back to storage. The function scales out automatically, within the account’s concurrency limits, to absorb sudden bursts of uploads. Writing results to a different bucket or prefix prevents the output from re-triggering the same function in an endless loop.

- Log and Stream Processing: FaaS can consume events directly from real-time data streams (like Kafka or Kinesis), processing logs, transforming data, and routing it to the appropriate data warehouse in near real time.
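The upload-triggered pipeline starts by reading the bucket and object key from the storage event. The sketch below parses an Amazon S3 event notification (`Records[].s3.bucket.name` and `Records[].s3.object.key`). Object keys in these notifications are URL-encoded, so the code decodes them with `urllib.parse.unquote_plus`. The `thumbnails/` output prefix is a hypothetical choice. The actual download, resize, and upload steps are omitted to keep the sketch self-contained.

```python
from urllib.parse import unquote_plus

OUTPUT_PREFIX = "thumbnails/"  # hypothetical destination prefix


def plan_thumbnail_jobs(event: dict) -> list:
    """Return (bucket, source key, destination key) tuples from an S3 event notification."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        if key.startswith(OUTPUT_PREFIX):
            continue  # our own output: skip it so the function never re-triggers itself
        jobs.append((bucket, key, OUTPUT_PREFIX + key))
    return jobs


def handler(event, context):
    jobs = plan_thumbnail_jobs(event)
    # Real code would fetch each object, resize it, and upload the result under the
    # destination key (for example with an AWS SDK); omitted in this sketch.
    return {"planned": len(jobs)}
```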

C. IT Automation and DevOps

Serverless functions automate routine operational tasks.

- Cost Monitoring: A scheduled function runs hourly, checks cloud resource utilization, identifies inefficient resources, and sends reports or even terminates unused resources.

- Security Remediation: A security event (e.g., an S3 bucket setting is changed to public) triggers a function that automatically reverts the security setting and alerts the administrator, providing real-time defense.
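The core of a scheduled cost-monitoring function is a simple decision rule applied to utilization data. In the sketch below, the record shape, the 5% CPU threshold, and the 24-hour window are all assumptions. A real function would pull metrics from the provider's monitoring service rather than from the event. It reports idle resources rather than terminating them, which is the safer default.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ResourceUsage:
    """Hypothetical record shape; real data would come from the provider's monitoring API."""
    resource_id: str
    hourly_cpu_percent: List[float] = field(default_factory=list)


def find_idle(resources: List[ResourceUsage], cpu_threshold: float = 5.0,
              min_hours: int = 24) -> List[str]:
    """Flag resources whose hourly CPU stayed below the threshold for the last `min_hours` hours."""
    idle = []
    for r in resources:
        recent = r.hourly_cpu_percent[-min_hours:]
        # Require a full window of data so a freshly launched resource is not flagged.
        if len(recent) >= min_hours and max(recent) < cpu_threshold:
            idle.append(r.resource_id)
    return idle


def handler(event, context):
    """Scheduled entry point (e.g. hourly). Metrics in the event are a simplifying assumption."""
    resources = [ResourceUsage(**r) for r in event.get("resources", [])]
    return {"idle": find_idle(resources)}
```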

8. The Future of Computing is Event-Driven

The rise of Serverless indicates a clear trajectory for cloud architecture: the increasing reliance on event-driven, fully managed, and hyper-abstracted services.

- Serverless Containers: The integration of FaaS principles with container technology (e.g., AWS Fargate, Azure Container Instances) allows developers to run containers without managing the underlying VM, bringing the benefits of Serverless to containerized applications and bridging the gap between PaaS and FaaS.

- The Rise of the Serverless Operator: The skill set of the modern cloud engineer is changing from server administration (patching, OS management) to platform integration (wiring services together, configuring IAM, optimizing cost, and debugging distributed flows).

- Edge Computing: Serverless functions are increasingly being deployed at the Edge (closer to the user) via CDNs, allowing code to run globally with ultra-low latency, a critical requirement for global applications and IoT.

Conclusion: Code is the New Infrastructure

Serverless computing is not just a feature; it is a profound architectural and economic shift that redefines the relationship between developers and infrastructure. By adopting the FaaS model and leveraging integrated BaaS, organizations gain access to highly elastic scale and fine-grained, pay-per-use billing. They also gain a radically simplified operational model.

The elimination of idle cost, combined with the automatic resilience and security inherent in the provider’s managed services, allows businesses to innovate faster, deploy features more frequently, and focus their engineering genius on solving customer problems. The ultimate promise of the cloud—computing as an invisible, limitless utility—is finally realized with Serverless. Focus on the code; the cloud handles the rest.