The shift to cloud computing—characterized by its rapid provisioning and pay-as-you-go model—has fundamentally changed how organizations acquire and consume IT resources. While the technical agility is undeniable, the financial model is often misunderstood, leading to significant waste and unpredictability in operational expenditure (OpEx). Cloud Financial Operations (FinOps) is a portmanteau of “Finance” and “DevOps,” representing a cultural practice that brings financial accountability and visibility to the variable spending of the cloud. It’s an operating model that unites technology, finance, and business teams to ensure that cloud spend is optimized, predictable, and aligned with business value. FinOps acknowledges that the cloud is a utility, and like any utility, its consumption must be managed and understood to maximize value. It is essentially an adaptive methodology for managing the economic complexity introduced by on-demand, elastic cloud resources.

This extensive guide provides a practical, in-depth introduction to the FinOps framework, detailing its core principles, defining its stakeholders, and outlining the structured, cyclical processes necessary for continuous cloud cost optimization. We will delve into strategies for gaining granular visibility, empowering engineering teams with data, and leveraging advanced pricing models to translate technological flexibility into maximum financial efficiency. Implementing FinOps is crucial for any cloud-driven enterprise aiming to control costs, increase predictability, and drive business value from its technology investments.

1. Defining the FinOps Framework and Principles

FinOps is defined by a set of core principles and a structured lifecycle that drives continuous improvement in cloud spending.

The Core Principles of FinOps

FinOps success is built upon a foundation of shared understanding and collaborative action across the organization.

-

Teams Must Collaborate: Financial success in the cloud requires active, daily collaboration between engineers, product owners, and finance professionals. Engineers need to understand cost drivers, and finance needs to understand the technical elasticity.

-

Decisions are Driven by Business Value: Cloud cost should be viewed not just as an expense, but as an investment. Optimization decisions must balance cost reduction with business goals, speed, and reliability.

-

Everyone Takes Ownership for Cloud Usage: Unlike the traditional data center, where IT owned the cost, in the cloud, every engineering team consuming resources is an active participant in managing the spending. Accountability is decentralized.

-

FinOps Reports Should Be Timely and Accessible: Financial data must be delivered quickly and in a format that is easily understandable by the respective consumer (e.g., engineers need granular usage data; finance needs aggregated forecasts).

-

A Centralized Team Drives FinOps: A dedicated FinOps team (or practice) is needed to establish standards, automate reporting, facilitate communication, and manage complex commitment pricing.

-

Optimize for the Variable Cost Model: Embrace the unique characteristics of cloud pricing (pay-as-you-go, elasticity) to drive efficiency, rather than treating the cloud like an expensive fixed-cost data center.

The FinOps Persona Triad

FinOps requires the active participation of three distinct persona groups, each with unique responsibilities and goals.

-

Engineering and Operations (The Execution): Focus on technical efficiency, rightsizing, architectural optimization, tagging, and implementing scale-down automation. Their goal is speed and resource efficiency.

-

Finance (The Governance): Focus on budgeting, forecasting, allocating costs, managing procurement (Reserved Instances/Savings Plans), and ensuring compliance. Their goal is financial predictability and auditability.

-

Business and Product Owners (The Value): Focus on prioritizing features, evaluating the return on investment (ROI) for cloud spend, and defining business value for the technology investments. Their goal is maximizing profit and product margin.

2. The FinOps Capability and Process Cycle

The FinOps framework operates as a continuous, cyclical process that ensures cost management is not a one-time audit but an ingrained operational practice. This cycle has three main phases: Inform, Optimize, and Operate.

Phase 1: Inform (Gaining Visibility and Allocation)

The primary objective is to make all cloud costs visible, accurate, and understandable to all relevant stakeholders.

-

Allocation and Tagging: The foundational step. Every cloud resource must be tagged with mandatory business and technical identifiers (e.g., application, owner, environment). This enables accurate allocation of cost to the responsible business unit or team.

-

Ingestion and Reporting: Centralizing the raw billing data (which is often extremely complex) into a readable format. Utilizing native cloud cost tools (Cost Explorer, Billing Dashboards) and FinOps platforms to generate reports.

-

Shared Cost Management: Developing clear policies for handling costs that are shared across multiple teams (e.g., VPNs, core monitoring tools, shared Kubernetes clusters) and accurately distributing them back to the consuming teams (chargeback). This often requires the finance team to work closely with engineering to map technical costs to business use.

-

Budgeting and Forecasting: Establishing budget targets for teams and using historical consumption data and anticipated project growth to create accurate, data-driven financial forecasts. Accuracy should improve with each cycle.

-

Anomaly Detection: Implementing automated alerting mechanisms to notify teams immediately when spending deviates unexpectedly from the established baseline, preventing costly runaway processes.
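The chargeback step under Shared Cost Management can be sketched in a few lines. This example assumes one common policy: shared costs are split in proportion to each team's directly tagged spend. Even splits or usage-based drivers (such as CPU-hours on a shared cluster) are equally valid alternatives. The team names and amounts are hypothetical.

```python
from typing import Dict


def allocate_shared_cost(direct_costs: Dict[str, float], shared_cost: float) -> Dict[str, float]:
    """Split a shared cost across teams in proportion to their direct (tagged) spend.

    Returns each team's fully loaded cost: its direct spend plus its share of the pool.
    """
    total_direct = sum(direct_costs.values())
    if total_direct <= 0:
        raise ValueError("direct costs must sum to a positive amount")
    return {
        team: cost + shared_cost * (cost / total_direct)
        for team, cost in direct_costs.items()
    }


# Hypothetical monthly figures: tagged spend per team, plus a shared
# cluster/monitoring bill that no single team owns.
direct = {"checkout": 6000.0, "search": 3000.0, "analytics": 1000.0}
loaded = allocate_shared_cost(direct, shared_cost=2000.0)
for team, cost in loaded.items():
    print(f"{team}: {cost:.2f}")  # checkout: 7200.00, search: 3600.00, analytics: 1200.00
```

A proportional split keeps the total unchanged: the loaded costs add up to direct plus shared spend. The trade-off is that a team's share of the shared pool moves whenever other teams' spending changes.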

Phase 2: Optimize (Achieving Savings and Efficiency)

This phase involves the technical execution of cost-saving strategies driven by the data uncovered in the Inform phase.

-

Rightsizing and Efficiency: Identifying and downgrading oversized compute, storage, and database instances to match actual utilization metrics. This is a continuous effort that requires engineers to analyze CPU, RAM, and I/O usage over extended periods (e.g., 90 days).

-

Commitment-Based Discounts: Managing the organization’s purchasing strategy for Reserved Instances (RIs) and Savings Plans (SPs) to cover predictable baseline consumption, maximizing long-term discounts without over-committing.

-

Automation and Scheduling: Implementing automation to turn off non-production environments (Dev/Test/QA) during off-hours (evenings and weekends) and utilizing features like Auto-Scaling to rapidly scale down idle resources to zero capacity when possible.

-

Waste Reduction: Actively identifying and cleaning up orphaned, unattached, or unused resources (e.g., unattached storage volumes, old snapshots, unassociated elastic IPs). This relies on automated scanning tools.

-

Storage Tiering: Implementing lifecycle policies to automatically move data from expensive high-performance storage to cheaper archival tiers based on access frequency, which cuts the cost of retaining rarely accessed data.
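The rightsizing step can be sketched as a simple rule over utilization history. The sketch assumes per-instance CPU and memory samples collected over the review window (e.g., 90 days). It uses an illustrative rule: halve the size when the 95th percentile of both metrics stays below 40%. The percentile, the threshold, and the halving heuristic are all assumptions, not a standard.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty sample list."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_vcpus(current_vcpus: int, cpu_pct: Sequence[float],
                    mem_pct: Sequence[float], threshold: float = 40.0) -> int:
    """Suggest half the vCPUs if both CPU and memory p95 stay below `threshold` percent.

    Halving is a hypothetical heuristic mirroring how many instance families step
    down one size (e.g., 8 -> 4 vCPUs), which usually halves memory as well.
    A 40% threshold keeps projected utilization under roughly 80% after halving.
    """
    p95_cpu = percentile(cpu_pct, 95)
    p95_mem = percentile(mem_pct, 95)
    if p95_cpu < threshold and p95_mem < threshold and current_vcpus > 1:
        return current_vcpus // 2
    return current_vcpus


# Hypothetical utilization samples (percent), heavily abbreviated.
cpu = [12.0, 18.5, 22.0, 30.0, 25.0] * 20
mem = [35.0, 38.0, 33.0] * 30
print(recommend_vcpus(8, cpu, mem))  # 4
```

The rule checks memory as well as CPU because a memory-bound workload with idle CPUs would otherwise be downsized into trouble.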

Phase 3: Operate (Sustaining Control and Improvement)

The final phase ensures that the cost efficiency gains are sustained and that the FinOps culture is embedded in daily operations.

-

Policy and Governance: Establishing guardrails and automated policies (Policy-as-Code) to prevent new resources from being deployed in a non-compliant or wasteful manner (e.g., blocking the launch of non-compliant instance types or enforcing mandatory tagging).

-

Performance Tracking: Monitoring key performance indicators (KPIs) related to cost efficiency, such as cost per customer, cost per transaction, or percentage of resources covered by commitment discounts, to measure the impact of optimization efforts.

-

Continuous Feedback Loop: Embedding cost metrics directly into engineering toolsets and dashboards (e.g., displaying the cost of a Kubernetes namespace next to its latency) to provide real-time feedback and encourage cost-aware development decisions.

-

Benchmarking and Standardization: Comparing unit economics across different teams or against industry standards to identify best practices and standardize cost-efficient reference architectures and golden images.
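The Performance Tracking and Benchmarking items can be sketched as two small calculations. The first computes a unit-economics KPI (cost per 1,000 transactions) for each team. The second flags teams whose unit cost is above the group median as candidates for review. The team names, spend, and transaction counts are hypothetical, and "above the median" is only one possible review trigger.

```python
from statistics import median
from typing import Dict, Tuple


def unit_costs(spend: Dict[str, float], transactions: Dict[str, int]) -> Dict[str, float]:
    """Cost per 1,000 transactions for each team."""
    return {team: spend[team] * 1000 / transactions[team] for team in spend}


def above_median(costs: Dict[str, float]) -> Tuple[str, ...]:
    """Teams whose unit cost exceeds the median: candidates for a benchmarking review."""
    mid = median(costs.values())
    return tuple(sorted(team for team, c in costs.items() if c > mid))


# Hypothetical monthly spend (USD) and transaction counts per team.
spend = {"payments": 12000.0, "catalog": 4000.0, "search": 9000.0}
txns = {"payments": 6_000_000, "catalog": 4_000_000, "search": 3_000_000}
costs = unit_costs(spend, txns)
print(costs)                # {'payments': 2.0, 'catalog': 1.0, 'search': 3.0}
print(above_median(costs))  # ('search',)
```

Note that search does not spend the most in absolute terms; it is flagged because it spends the most per unit of business output, which is the point of unit economics.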

3. Practical Strategies for Compute Cost Optimization

Compute resources (VMs and containers) often represent the largest expense category. Optimization here is paramount and typically yields the quickest return on effort.

Dynamic Scaling and Elasticity

Embracing the cloud’s elasticity (the ability to grow and shrink capacity on demand) is the most fundamental way to save money on compute.

-

Time-Based Scheduling: Implement automated scripts (often managed by serverless functions) to halt all development, testing, and staging instances when engineers are not working. A standard 40-hour work week covers only 40 of the 168 hours in a week, so this alone can cut non-production compute costs by approximately 70%.

-

Metric-Driven Scaling: Configure Auto-Scaling Groups (ASGs) to scale based on application-specific metrics (e.g., the length of a processing queue, or concurrent active user sessions) rather than relying solely on generic indicators like CPU utilization, so that capacity tracks real load more closely.

-

Aggressive Scale-Down Policies: Set stringent policies that rapidly de-provision idle resources. Use scale-down cooldown periods that are shorter than the scale-up periods, prioritizing the quick return of unused capacity to the provider, but keep them long enough that the group does not thrash by repeatedly removing and re-adding the same capacity.

-

Predictive Scaling: Utilizing cloud provider tools that employ machine learning to predict upcoming traffic spikes (based on historical data) and provision capacity before the spike occurs, reducing reliance on expensive manual over-provisioning.
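Here is the arithmetic behind the scheduling estimate. A week has 168 hours, so running non-production instances only during a 40-hour working week leaves them stopped for 128 hours, about 76% of the time. Realized savings land somewhat below that figure, because schedules usually include buffer hours and attached storage is still billed while instances are stopped. The sketch below assumes a hypothetical Monday-to-Friday, 09:00 to 17:00 window in a single time zone. A scheduler function could call `should_run` to decide whether to start or stop tagged instances.

```python
from datetime import datetime

# Hypothetical working window: Monday-Friday, 09:00-17:00 local time (8 h x 5 days = 40 h).
WORK_DAYS = range(0, 5)  # datetime.weekday(): Monday == 0 ... Friday == 4
START_HOUR, END_HOUR = 9, 17


def should_run(now: datetime) -> bool:
    """True if a non-production instance should be running at `now`."""
    return now.weekday() in WORK_DAYS and START_HOUR <= now.hour < END_HOUR


def off_hours_fraction() -> float:
    """Fraction of the 168-hour week during which the schedule keeps instances stopped."""
    on_hours = len(WORK_DAYS) * (END_HOUR - START_HOUR)
    return 1 - on_hours / (7 * 24)


print(f"{off_hours_fraction():.1%}")          # 76.2%
print(should_run(datetime(2024, 1, 1, 10)))  # True (a Monday, 10:00)
```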

Leveraging Spot and Preemptible Instances

Running suitable workloads on the provider’s surplus capacity yields the deepest available discounts, delivering large cost savings with manageable risk.

-

Spot Instance Strategy: Use Spot Instances (AWS), Spot Virtual Machines (Azure), or Spot and Preemptible VMs (GCP) for stateless, fault-tolerant workloads such as batch processing, data analytics, containerized microservices, and CI/CD pipelines. Discounts often range from 70% to 90% off the standard on-demand price.

-

Graceful Preemption Handling: Architect applications to tolerate interruption. Providers send only a short warning before reclaiming capacity (about two minutes on AWS, 30 seconds on Google Cloud). For container workloads, this means ensuring the orchestrator (like Kubernetes) can automatically drain workloads from a machine that receives a preemption warning and reschedule them elsewhere within that window.

-

Diverse Instance Pools: Configure Spot requests to draw capacity from a wide variety of instance types, increasing the likelihood of securing capacity and decreasing the chance that the entire fleet is preempted at the same time.
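On AWS, an instance can detect an upcoming Spot interruption through the instance metadata service (IMDS). About two minutes before the interruption, the `spot/instance-action` path returns a small JSON document containing an action and a UTC time. At all other times it returns HTTP 404. The sketch below fetches that path using an IMDSv2 session token and computes the seconds remaining. It does not perform the drain itself, and the function names are our own. Other providers expose equivalent signals through different APIs.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import Optional

IMDS = "http://169.254.169.254/latest"


def seconds_until_interruption(doc: str, now: datetime) -> float:
    """Parse an instance-action document and return the seconds left before interruption."""
    payload = json.loads(doc)
    when = datetime.strptime(payload["time"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return (when - now).total_seconds()


def fetch_instance_action(timeout: float = 1.0) -> Optional[str]:
    """Return the raw instance-action document, or None if no interruption is scheduled.

    Only works from inside an EC2 instance; requests an IMDSv2 token first.
    """
    token_req = urllib.request.Request(
        f"{IMDS}/api/token", method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
    )
    token = urllib.request.urlopen(token_req, timeout=timeout).read().decode()
    req = urllib.request.Request(
        f"{IMDS}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token},
    )
    try:
        return urllib.request.urlopen(req, timeout=timeout).read().decode()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # 404 means no interruption is currently scheduled
            return None
        raise


# Offline example using a sample document in the documented shape.
sample_doc = '{"action": "terminate", "time": "2024-01-01T12:02:00Z"}'
now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
print(seconds_until_interruption(sample_doc, now))  # 120.0
```

In Kubernetes you rarely hand-roll this. A node-level agent, such as the open-source AWS Node Termination Handler, typically polls for the notice and cordons and drains the node.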

Serverless vs. IaaS Cost Trade-Offs

Choosing the correct compute model based on the workload’s consumption profile is critical for optimal cost performance.

-

FaaS (Functions as a Service): Ideal for intermittent, event-driven, or highly variable workloads. The billing granularity (often down to 1ms) eliminates the cost of idle time, making it exceptionally cost-effective for tasks that run briefly or sporadically.

-

Containers (PaaS/Orchestration): Cost-effective for stable, high-utilization, 24/7 workloads. The ability to achieve high density by packing many containers onto a few VMs, especially those covered by a Savings Plan, minimizes the per-unit cost.

-

Managed Container Platforms (Fargate): Use serverless container platforms for containerized workloads that are bursty but require the container model. This eliminates the cost and operational overhead of managing the underlying cluster VMs.
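The FaaS-versus-always-on trade-off can be framed as a break-even calculation. In this model, FaaS cost is a per-request fee plus compute billed in GB-seconds, while a VM is billed for every hour of the month regardless of load. All prices below are placeholders, not any provider's current list prices, so substitute your own rates.

```python
# Placeholder prices (assumptions; check your provider's current pricing).
PRICE_PER_MILLION_REQUESTS = 0.20   # USD
PRICE_PER_GB_SECOND = 0.0000167     # USD
VM_PRICE_PER_HOUR = 0.04            # USD, a small always-on instance
HOURS_PER_MONTH = 730


def faas_monthly_cost(requests: int, avg_ms: float, memory_gb: float) -> float:
    """Monthly FaaS cost: request fee plus duration x memory (GB-seconds)."""
    gb_seconds = requests * (avg_ms / 1000) * memory_gb
    return requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS + gb_seconds * PRICE_PER_GB_SECOND


def vm_monthly_cost() -> float:
    """An always-on VM is billed for every hour, busy or idle."""
    return VM_PRICE_PER_HOUR * HOURS_PER_MONTH


def breakeven_requests(avg_ms: float, memory_gb: float) -> float:
    """Monthly request volume at which FaaS and the always-on VM cost the same."""
    per_request = (PRICE_PER_MILLION_REQUESTS / 1_000_000
                   + (avg_ms / 1000) * memory_gb * PRICE_PER_GB_SECOND)
    return vm_monthly_cost() / per_request


# 100 ms invocations at 512 MB: roughly 28 million requests/month at these placeholder prices.
print(f"{breakeven_requests(100, 0.5):,.0f}")
```

Below the break-even volume, FaaS is cheaper because idle time costs nothing. Above it, a well-utilized VM or container wins. The model ignores free tiers and the VM's throughput ceiling.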

4. Storage, Database, and Network Cost Control

While smaller than compute, these cost categories often grow silently and require specialized optimization strategies, particularly due to the compounding effect of data retention and network egress fees.

Intelligent Storage Tiering

Matching the required access speed and frequency to the appropriate storage tier is a simple yet powerful optimization tactic.

-

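Automated Lifecycle Management: Configure rules on object storage (S3, Azure Blob) to automatically transition data from expensive Hot (Standard) tiers to cheaper Infrequent Access tiers after 30 days, and eventually to long-term Archive tiers (like Glacier) after 90 days. Note that S3 lifecycle rules count days from object creation rather than from last access. Access-based tiering requires S3 Intelligent-Tiering or, on Azure, lifecycle rules based on last access time with access tracking enabled. This requires no manual intervention after setup.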

-

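Volume Rightsizing: Actively monitor the storage usage of persistent volumes (EBS, Azure Disks) and identify those that are significantly over-provisioned, to avoid paying for unused allocated capacity. These volumes can be grown in place but not shrunk. Downsizing means creating a smaller volume, migrating the data, and deleting the original, which makes sizing correctly at creation time especially valuable.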

-

Deleting Orphaned Resources: Implement automated cleanup routines to detect and delete orphaned resources: unattached volumes, expired or incomplete database snapshots, and unassociated Elastic IPs that still accrue charges.
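A lifecycle policy like the 30/90-day example above can be expressed in the structure that the S3 PutBucketLifecycleConfiguration API accepts. The sketch below only builds the configuration. The rule ID, prefix, and bucket name are hypothetical, and applying the policy requires boto3 and AWS credentials, as shown in the commented call. In these rules, days count from object creation, not from last access.

```python
from typing import Any, Dict


def tiering_rule(prefix: str = "", ia_days: int = 30, archive_days: int = 90) -> Dict[str, Any]:
    """Build an S3 lifecycle configuration: Standard -> Standard-IA -> Glacier ("GLACIER").

    Days are counted from object creation, not from last access.
    """
    if archive_days <= ia_days:
        raise ValueError("archive transition must come after the IA transition")
    return {
        "Rules": [
            {
                "ID": "tier-down-by-age",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": archive_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = tiering_rule(prefix="logs/")
# Applying it (requires boto3 and credentials; the bucket name is hypothetical):
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-log-bucket", LifecycleConfiguration=config)
```

Generating the rule in code, rather than clicking it together in a console, lets the same policy be reviewed and reapplied across many buckets.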

Database Efficiency Strategies

Managed database services often have complex pricing based on I/O, storage, and instance size, making efficient operation critical.

-

Serverless Databases: Using databases that automatically scale capacity and billing with real-time transactional activity (e.g., Amazon Aurora Serverless) is ideal for applications with unpredictable traffic patterns or long idle periods, because it reduces the cost of paying for idle database capacity.

-

Read Replicas for Offloading: For read-heavy applications, deploy Read Replicas (which can often run on smaller instance sizes than the primary) across multiple Availability Zones. Distributing read traffic to the replicas avoids having to scale up the primary instance, which handles all writes and is typically the most expensive node.

-

IOPS and Storage Review: Regularly audit the provisioned IOPS (Input/Output Operations Per Second) for high-performance disks. Many workloads are provisioned with more IOPS than they actually consume, leading to unnecessary spending on performance capacity that is never utilized.
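The IOPS audit comes down to comparing provisioned IOPS against observed peak consumption. This sketch assumes you have exported per-volume IOPS samples (read plus write operations per second) from your monitoring system. It suggests a new setting equal to the 99th percentile plus 30% headroom. The percentile, the headroom, and the volume names are illustrative assumptions.

```python
import math
from typing import Dict, List


def p99(samples: List[float]) -> float:
    """Nearest-rank 99th percentile of a non-empty list."""
    ordered = sorted(samples)
    return ordered[max(1, math.ceil(99 * len(ordered) / 100)) - 1]


def iops_recommendations(provisioned: Dict[str, int], observed: Dict[str, List[float]],
                         headroom_pct: int = 30) -> Dict[str, int]:
    """Suggest a lower IOPS setting for volumes provisioned above p99 usage plus headroom."""
    suggestions: Dict[str, int] = {}
    for volume, prov in provisioned.items():
        target = math.ceil(p99(observed[volume]) * (100 + headroom_pct) / 100)
        if target < prov:
            suggestions[volume] = target
    return suggestions


# Hypothetical volumes and exported IOPS samples.
provisioned = {"orders-db": 10000, "audit-db": 3000}
observed = {
    "orders-db": [1200.0, 1800.0, 2500.0, 2100.0] * 25,
    "audit-db": [2600.0, 2800.0, 2900.0] * 40,
}
print(iops_recommendations(provisioned, observed))  # {'orders-db': 3250}
```

Check the volume type before acting on a suggestion. For example, gp3 volumes include a 3,000 IOPS baseline at no extra charge, so a recommendation below that floor does not save anything.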

Minimizing Egress Charges (Data Transfer Out)

Data transfer fees, particularly Egress (data moving out of the cloud provider’s network), are among the most frequently cited “surprise” costs.

-

Locality Principle: Adhere strictly to the principle of data locality. Architect applications to keep components that exchange large volumes of data (like data processing engines and databases) within the same Region and, where availability requirements allow, the same Availability Zone. Traffic between Availability Zones is typically billed per GB, while traffic within a single Availability Zone over private IP addresses is generally free.

-

Caching and CDN Utilization: Deploy a Content Delivery Network (CDN) to cache static content globally. Serving content from the CDN’s edge network is often significantly cheaper than serving it directly from the origin server. Every cache hit also avoids an origin response, reducing direct origin egress, which is frequently the largest source of egress charges.

-

Inter-Cloud Data Strategy: If data must be transferred between cloud providers, explore dedicated interconnect services (e.g., AWS Direct Connect) or high-volume transfer programs that can offer better rates than standard internet egress pricing.
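The effect of a CDN on the egress bill can be estimated with a simple model. Every byte served to users is delivered by the CDN, and only cache misses are fetched from the origin. The per-GB rates below are placeholders, not real list prices. Some providers waive origin-to-CDN transfer entirely, in which case that rate should be set to zero.

```python
# Placeholder per-GB rates in USD (assumptions; substitute your provider's pricing).
ORIGIN_INTERNET_EGRESS = 0.09  # origin straight to end users
ORIGIN_TO_CDN = 0.02           # set to 0.0 where the provider waives this transfer
CDN_DELIVERY = 0.06            # CDN edge to end users


def direct_cost(gb_served: float) -> float:
    """All traffic leaves the origin directly for the internet."""
    return gb_served * ORIGIN_INTERNET_EGRESS


def cdn_cost(gb_served: float, hit_ratio: float) -> float:
    """The CDN delivers every byte; only cache misses are fetched from the origin."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    origin_fetch = gb_served * (1 - hit_ratio) * ORIGIN_TO_CDN
    return gb_served * CDN_DELIVERY + origin_fetch


monthly_gb = 50_000
print(f"direct: {direct_cost(monthly_gb):.2f}  via CDN: {cdn_cost(monthly_gb, 0.9):.2f}")
```

The comparison only favors the CDN when its delivery rate is below direct egress, and the advantage grows with the cache hit ratio.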

5. Strategic Procurement: Commitment Management

The highest potential for long-term savings in the cloud is realized by strategically leveraging commitment discounts. This requires close collaboration between the Finance and Engineering teams to manage risk and maximize coverage.

Reserved Instances (RIs) and Savings Plans (SPs)

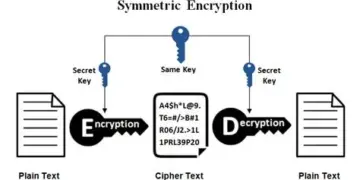

These programs offer deep discounts in exchange for a commitment to a consistent amount of usage (RIs) or hourly spend (SPs) over a one- or three-year term.

-

Baseline Commitment Calculation: Accurately determine the absolute minimum, stable level of compute usage (the baseline) that the organization needs 24/7. Use historical data analysis to ensure the commitment does not exceed this baseline, keeping the peak capacity on the flexible on-demand pricing.

-

Savings Plan Flexibility: Favor Savings Plans over traditional RIs where possible, especially for compute. SPs commit to an hourly spending amount (e.g., $10/hour). Compute Savings Plans then apply the discount automatically across instance types, families, and even regions, offering much greater flexibility and reducing the risk of commitment wastage due to technical migration. EC2 Instance Savings Plans trade some of that flexibility for a deeper discount, because they are tied to one instance family in one region.

-

Centralized Commitment Management: Assign the management and purchase of RIs/SPs to the central FinOps team. This team can optimize the commitment pool across all accounts and departments, achieving the highest possible discount tier and ensuring the discount is applied to the entire organization.

-

Utilization Monitoring: Continuously monitor the utilization rate of RIs and SPs. Low utilization means wasted commitment (paying for something you are not using). Implement alerts to notify the FinOps team when utilization drops below a target threshold (e.g., 95%).
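The baseline calculation and utilization monitoring can be sketched together. This sketch assumes hourly usage over a trailing window, expressed in normalized units such as vCPUs in use. It commits at a low percentile of that usage (the 10th percentile, an illustrative choice) rather than at the absolute minimum, so that a handful of anomalous hours does not drag the commitment down. Utilization is the share of committed capacity actually consumed, with an alert below 95%. All names and figures are hypothetical.

```python
import math
from typing import Sequence


def baseline(hourly_usage: Sequence[float], pct: float = 10.0) -> float:
    """Low-percentile (nearest-rank) usage level to commit to."""
    ordered = sorted(hourly_usage)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def utilization(commitment: float, hourly_usage: Sequence[float]) -> float:
    """Share of the committed capacity actually consumed across the window."""
    used = sum(min(u, commitment) for u in hourly_usage)
    return used / (commitment * len(hourly_usage))


def needs_alert(util: float, target: float = 0.95) -> bool:
    """True when utilization falls below the target threshold."""
    return util < target


# Hypothetical week: 8 quiet hours at 40 units, 16 busy hours at 100 units, every day.
day = [40.0] * 8 + [100.0] * 16
week = day * 7
b = baseline(week)
print(b, utilization(b, week))                   # 40.0 1.0
print(needs_alert(utilization(60.0, week)))      # True: committing to 60 wastes ~11%
```

Committing at the baseline keeps utilization at 100%, leaving the busy hours on on-demand pricing. Over-committing to 60 units pushes utilization down to about 89%, which is exactly the wastage the alert is meant to catch.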

Leveraging Specialized Pricing and Programs

-

Licensing Optimization: For organizations heavily invested in Microsoft products, leverage specialized programs (e.g., Azure Hybrid Benefit) to bring existing on-premises licenses to the cloud, significantly reducing the cost of running Windows Server or SQL Server instances.

-

Developer Programs and Free Tiers: Actively utilize the free tiers and development credits offered by cloud providers for initial prototyping and small-scale testing to reduce preliminary project costs.

6. Sustaining the FinOps Culture: Governance and Automation

FinOps must be an enduring, automated discipline, not a one-time project. Governance ensures compliance and prevents cost drift, while automation provides the necessary speed.

Policy-as-Code (PaC) for Guardrails

Automate the enforcement of cost policies before resources are provisioned to prevent wasteful configurations from entering the environment.

-

Cost Guardrails: Implement PaC rules that prevent engineers from launching unbudgeted or highly inefficient resources, such as blocking the deployment of the most expensive instance families or requiring justification for launching resources without an associated commitment.

-

Tagging Enforcement: Mandate that all resources must be tagged correctly upon creation. PaC tools should automatically prevent the deployment of any resource that is missing the mandatory owner or cost_center tags, ensuring accurate allocation from day one.

-

Lifecycle Governance: Automate policies that manage the lifecycle of resources, such as applying tags that set an automated deletion date (Time-To-Live, TTL) for development resources after a set period (e.g., 30 days).
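In practice these guardrails usually live in a policy engine, such as Open Policy Agent or a cloud provider's native policy service, but the checks themselves are simple. The sketch below evaluates a planned resource's tags in plain Python. It reports missing owner or cost_center tags, which a deployment gate would then reject. It also flags development resources whose TTL has passed. The `expires-on` tag name, its YYYY-MM-DD format, and the `environment` value `dev` are hypothetical conventions.

```python
from datetime import date
from typing import Dict, List

REQUIRED_TAGS = ("owner", "cost_center")


def tag_violations(tags: Dict[str, str]) -> List[str]:
    """Return the mandatory tags that are missing or empty (empty list == compliant)."""
    return [key for key in REQUIRED_TAGS if not tags.get(key, "").strip()]


def is_expired(tags: Dict[str, str], today: date) -> bool:
    """True if a dev resource's hypothetical 'expires-on' TTL tag (YYYY-MM-DD) is in the past."""
    if tags.get("environment") != "dev" or "expires-on" not in tags:
        return False
    return date.fromisoformat(tags["expires-on"]) < today


planned = {"owner": "team-search", "environment": "dev", "expires-on": "2024-03-01"}
print(tag_violations(planned))                      # ['cost_center']
print(is_expired(planned, today=date(2024, 3, 15)))  # True
```

A deployment gate would reject any resource with a non-empty violation list. A scheduled cleanup job would use `is_expired` to select development resources for deletion.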

Automated Cost Anomaly Detection

Set up proactive, intelligent systems to catch unexpected spending spikes quickly before they escalate into massive overruns.

-

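Machine Learning Baselines: Utilize cloud-native machine learning tools that automatically learn the “normal” spending profile of each team and flag significant, unusual deviations as soon as new billing data arrives (typically within hours rather than in true real time). Because the baseline adapts to each team’s usual patterns, this is often more effective than static budget thresholds.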

-

Automated Response: Configure alerts to notify the responsible engineering team and, for high-risk anomalies, trigger automated response actions using SOAR (Security Orchestration, Automation, and Response) principles. This can include pausing a runaway function or enforcing a spending cap on the affected resource.
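Provider tools rely on their own learned models. The core idea of comparing each day's spend against a learned baseline can be illustrated with a much simpler statistical stand-in: a trailing-window mean and standard deviation. The sketch flags any day more than three standard deviations above that mean. This z-score rule is a swapped-in technique, not how any specific provider's service works. The window length, threshold, and spend figures are assumptions.

```python
from statistics import mean, stdev
from typing import List, Sequence


def anomalous_days(daily_spend: Sequence[float], window: int = 14, z: float = 3.0) -> List[int]:
    """Indices of days whose spend exceeds the trailing-window mean by more than z std devs."""
    flagged = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Guard against a perfectly flat history (sigma == 0): flag any increase.
        if (sigma == 0 and daily_spend[i] > mu) or (sigma > 0 and daily_spend[i] > mu + z * sigma):
            flagged.append(i)
    return flagged


# Hypothetical daily spend: three ordinary weeks, then a runaway day.
spend = [100.0, 104.0, 98.0, 101.0, 99.0, 103.0, 97.0] * 3 + [180.0]
print(anomalous_days(spend))  # [21]
```

A flagged index would feed the alerting and automated-response path described above. Production detectors also account for weekly seasonality and keep confirmed anomalies out of the baseline, which this sketch does not.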

Integrating Cost Data into the Engineering Workflow

Shifting cost awareness “left” means embedding financial accountability directly into the tools and processes used by developers and operations staff daily.

-

Build-Time Estimation: Integrate tools into the Continuous Integration (CI) pipeline that provide an estimated cost for new infrastructure defined in IaC (Infrastructure as Code) templates. This allows developers to choose cost-efficient designs before the code is even deployed to the cloud.

-

Run-Time Visibility: Display cost metrics (e.g., the hourly cost of a microservice or Kubernetes namespace) alongside performance and reliability metrics in real-time monitoring dashboards, making cost an undeniable part of the operational feedback loop.

-

Regular Cross-Functional Reviews: Establish mandatory, frequent (e.g., weekly or bi-weekly) FinOps review meetings where Engineering, Finance, and Product teams collaboratively analyze recent cost reports, debate optimization trade-offs, and set priorities for the next optimization sprint.
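Build-time estimators (Infracost is one open-source example for Terraform) parse the planned infrastructure and attach prices to it. The sketch below strips the idea down to a CI-style check. It prices the current and planned resource sets from a rate table, computes the monthly delta, and compares that delta against a budget. The rate table, size names, and threshold are hypothetical placeholders. Real estimators also price storage, requests, and data transfer.

```python
from typing import List, Tuple

HOURS_PER_MONTH = 730
# Hypothetical on-demand hourly rates (USD); a real tool would query a pricing API.
HOURLY_RATE = {"small": 0.02, "medium": 0.04, "large": 0.08, "xlarge": 0.16}

Resource = Tuple[str, int]  # (size, count)


def monthly_cost(resources: List[Resource]) -> float:
    """Monthly cost of the given resources, assuming they run 24/7."""
    return sum(HOURLY_RATE[size] * count * HOURS_PER_MONTH for size, count in resources)


def check_delta(current: List[Resource], planned: List[Resource],
                max_increase: float) -> Tuple[bool, float]:
    """Return (ok, delta): the monthly cost change and whether it stays within budget."""
    delta = monthly_cost(planned) - monthly_cost(current)
    return delta <= max_increase, delta


ok, delta = check_delta([("medium", 2)], [("medium", 2), ("xlarge", 1)], max_increase=100.0)
print(f"Estimated monthly change: {delta:+.2f} USD, within budget: {ok}")
# In CI, exiting non-zero when not ok (e.g., sys.exit(0 if ok else 1)) would fail the step.
```

Reporting the delta rather than the total keeps the pull-request conversation focused on the cost of this specific change.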

Conclusion: FinOps as the Key to Cloud Value

FinOps is the crucial organizational practice that ensures the technological speed and elasticity of the cloud translate directly into tangible business value. It is not about simply cutting costs; it is about making informed, value-driven spending decisions. The core framework involves creating total visibility through rigorous tagging and allocation (Inform), acting on that data through rightsizing, automation, and strategic commitment management (Optimize), and sustaining the gains through governance, policy automation, and continuous feedback to engineering teams (Operate). By successfully implementing the FinOps cycle and embedding cost accountability into their culture, organizations can eliminate waste, increase financial predictability, and truly harness the economic power of the cloud. FinOps is the financial operating model for the age of the cloud utility.